Editor’s take: To be clear, we do not hate Nvidia. Quite the opposite; we have become increasingly convinced of their strong position in the data center since their March 2022 Analyst Day – six months before ChatGPT ignited the AI world. Our title references the 1999 rom-com, which is a modern adaptation of Shakespeare. While we wouldn’t say we love Nvidia, we have a high degree of conviction that they will continue to lead in data center silicon for the foreseeable future.

That being said, we strive for intellectual honesty, which means the higher our conviction in a thesis, the more we need to test it out. Poke holes. Look for ways in which we could be wrong. In this case, we want to explore all the ways Nvidia might be vulnerable.

Editor’s Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

Looking at this systematically is challenging, as Nvidia positions itself as providing complete solutions, which means all the pieces are tied together. But we will divide our analysis into several buckets and walk through each one.

The chipmakers

The first area is hardware. Nvidia has been executing incredibly well for several years and holds a clear performance advantage. Generally speaking, they dominate the market for AI training. However, the market for cloud inference is expected to be larger, and here, economics will matter more than raw performance. This shift is likely to introduce a lot more competition.

The closest competitor here is AMD with their MI300 series. While this hardware is highly performant, it still lacks many features. It is probably good enough to carve out a niche for AMD but seems unlikely to significantly impact Nvidia’s market share for the time being. Intel’s recently launched Gaudi 3 accelerator is enough to show that Intel is still in the game, but the device is neither programmable nor fully featured, limiting Intel’s ability to challenge Nvidia in certain market segments. Overall, among major chip companies, Nvidia appears to be well-positioned.

Additionally, there are a number of startups going after this market. The most advanced is probably Groq, which has released some fairly impressive inference benchmarks. However, our assessment is that their solution is only suitable for a subset of AI inference tasks. While this might be enough for Groq to remain competitive, it does not pose a threat to Nvidia across large portions of the market.

The hyperscalers

The most serious competition comes from the hyperscalers’ internal silicon solutions.

Google is by far the most advanced in this area. They recently disclosed that they used their TPUs to train the Gemini large language model, the only major crack in Nvidia’s training dominance. But Google is a special case: they control their software stack, allowing them to tailor TPUs very precisely. The other hyperscalers are further behind.

After years of testing everything on the market, Meta has finally launched its own accelerator, following Microsoft, which launched its own last year. Both of these look interesting, but both are also first attempts, and it will take a few generations for these solutions to prove themselves. All of this reinforces our view that both companies will continue to rely heavily on Nvidia and AMD for a few more years.

Meanwhile, AWS is on the second generation of their inference and training chips, but these are also relatively behind the curve, and Amazon now seems to be scrambling to buy as much Nvidia output as they can. Their needs stem from the fact that they do not control their software stack; they run their customers’ software, and those customers have a strong preference for Nvidia.

Networking

Another important element in all this is networking hardware. The links between all the servers in a data center are a major constraint on AI models. Nvidia has a major advantage in its networking stack. Much of this comes from their acquisition of Mellanox in 2019, and their low-latency InfiniBand solution.

This deal is likely to be remembered as one of the best M&A deals in recent history. However, this advantage is a double-edged sword. Nvidia’s sales of complete systems today are an important part of their revenue growth, and in many use cases, those systems’ advantages rest largely on the networking element.

Recall that Nvidia admitted networking is the source of their advantage in the inference market. For the moment, InfiniBand remains critical to AI deployments, but the industry is pouring an immense amount of effort into the low-latency version of Ethernet, Ultra Ethernet. Should Ultra Ethernet deliver on its promise, which is by no means guaranteed, that would put pressure on Nvidia in some important areas.

In short, Nvidia faces a large quantity of competitors, but remains comfortably ahead in quality.

CUDA

A big reason for this lead remains its software stack. There are really two sides to Nvidia’s software – its compatibility layer “CUDA”, and the growing array of software services and models it provides to customers.

CUDA is the best known of these, often cited as the basis for the company’s lead in AI compute. We think this oversimplifies the situation. CUDA is really shorthand for a whole host of features in Nvidia chips that make them programmable down to a very low level. From everything we can see, this moat remains incredibly solid.

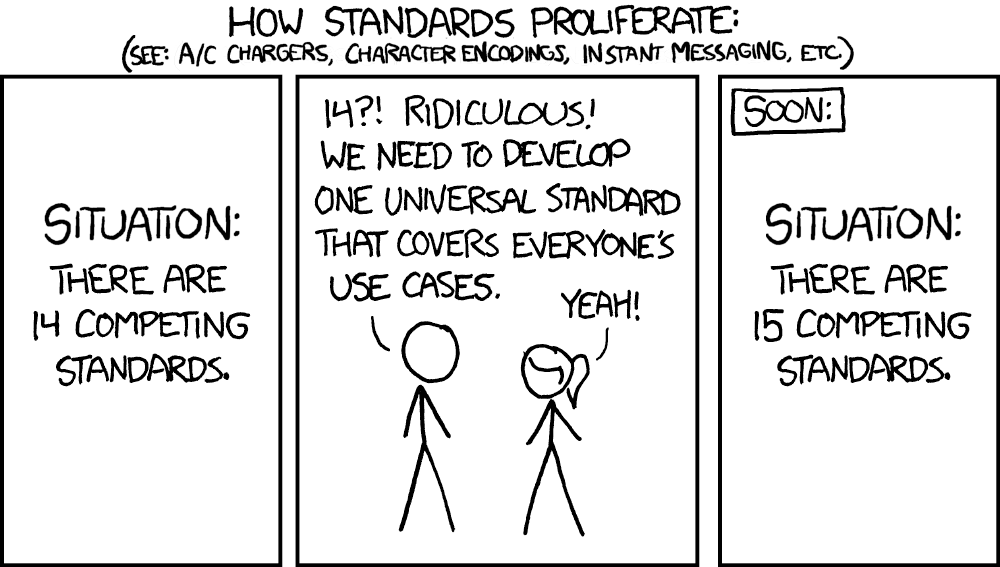

The industry is (finally) becoming aware of the power this software layer provides, and there are many initiatives to provide alternatives. These range from AMD’s ROCm to “open” alternatives like XLA and UXL. If you want a deep dive into this, Austin Lyons wrote a great primer on ChipStrat, which is definitely worth a read.

But the quick summary is that none of those have gained much traction yet, and the sheer array of alternatives risks diluting everyone’s efforts – as usual, XKCD said it best. The heuristic we have been using to evaluate these alternatives is to ask the proponents of each how many chips support the standard currently. Whenever the answer progresses past an awkward silence, we will revisit this position. The biggest threat to Nvidia on this front comes from their own customers. The major hyperscalers are all searching for ways to move away from CUDA, but they are probably the only ones capable of doing so.

More software

Beyond CUDA, Nvidia is also building up a whole suite of other software. These include pre-trained models for a few dozen end markets, composable service APIs (NIMs), and a whole host of others.

These make it much easier to train and deploy AI models, so long as those models run on Nvidia silicon. It is still early days for AI, and should Nvidia gain widespread adoption of these, they will effectively lock in a generation of developers whose entire software stacks rest on top of these services. So far, it is unclear how widespread that adoption is. We know that in some markets, such as pharma and biotech, there is considerable enthusiasm for Nvidia tools, while other markets are still in early evaluation phases.

It is unclear if Nvidia ever plans to charge for these and grow an actual software business, which leads to a larger fundamental question about Nvidia’s competitiveness. As they grow more successful and more prominent, the industry’s discomfort level grows.

Already the data center supply chain is full of grumbling about Nvidia’s pricing, its allocation of scarce parts, and long lead times. Nvidia’s largest customers, the hyperscalers, are highly wary of becoming too reliant on the company, especially as Nvidia seems to waver on the edge of launching its own Infrastructure as a Service (IaaS) offering.

How far will these customers go? How much of Nvidia’s stack will they buy into? There has to be a limit, but companies can often short-circuit long-term strategic thinking for short-term discounts and supply opportunities. The hyperscalers would not have found themselves in this position if they had not abandoned almost every startup that tried to sell them an alternative over the past decade. So, Nvidia definitely faces risks on this front, but for the moment, those risks are largely unformed.

The business model

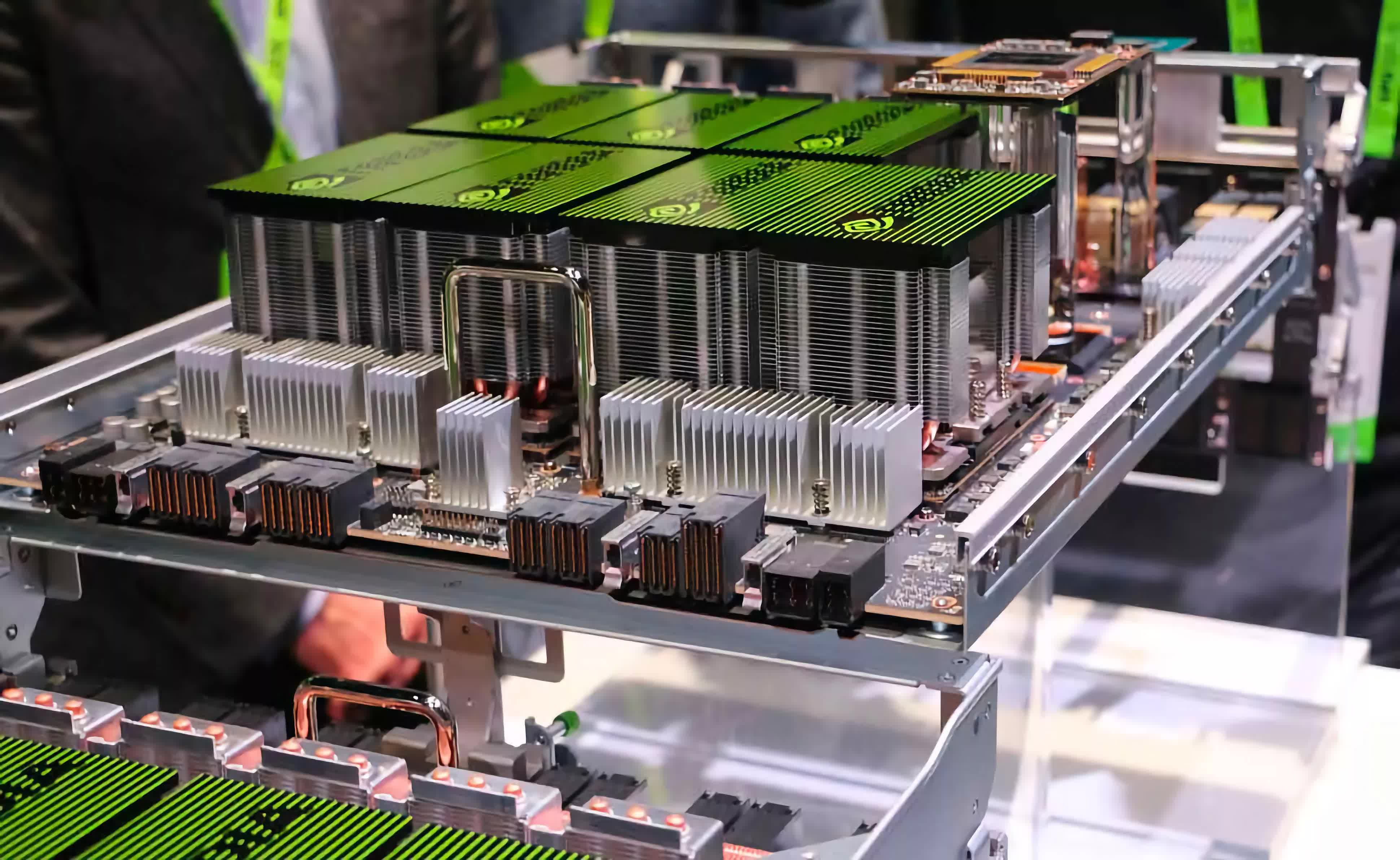

More broadly, we think there are challenges to Nvidia’s overall business model. The company has always sold complete systems, from graphics cards 30 years ago to mammoth DGX server racks today.

As much as the company says it is willing to sell decomposed components, they would clearly prefer to sell complete systems. And this poses a number of problems. As the company’s history demonstrates, when inventory cycles turn down, Nvidia stands at the end of the bullwhip, and the resulting swings wreak havoc on their financials.

Given the scope of their recent growth and the ever-larger systems they sell, the risk of a major reset is much larger. To be clear, we are not forecasting this to happen any time soon, but it is worth considering the magnitude of the problem.

Infrastructure as a Service (IaaS)

Which leads us to AI factories. There are now roughly a dozen data center operators, independent of the public cloud IaaS hyperscalers, running warehouses full of Nvidia GPUs. Nvidia has invested in many of these operators, and they are likely a major source of revenue for Nvidia, given that their value proposition rests largely on their ability to offer GPU instances on demand.

This is Nvidia’s channel, and is likely to be a source of problems somewhere down the road. In fairness, there is a remote possibility that AI presents such a seismic shift in compute that AI factories become the dominant IaaS providers, but there are a few trillion-dollar companies that would fight a scorched earth war to prevent that from happening.

Next-gen AI

Finally, the ultimate risk hanging over Nvidia is tied to the growth of neural network-based machine learning, a.k.a. AI. So far, the gains from AI are fairly narrow in scope – code generation, digital marketing, and a host of small, under-the-hood software performance gains.

If you wanted to construct a bear case for Nvidia, it should explore the possibility that AI goes no further. We think that is unlikely, and our sense is that AI can still advance much further, but should it fail to, Nvidia would be left highly exposed.

By the same token (pun intended), AI software is changing so rapidly that some future genius developer could come up with a superior AI model that shifts compute in a direction where GPUs and Nvidia’s investment matter less. This seems unlikely, but there is still the risk that AI software either stagnates here or advances to the point that it deflates the need for so many massive GPU clusters around the world. This should not be seen as a catastrophe for Nvidia, but it would spark a significant slowdown.

To sum all of this up, Nvidia is in a very strong position, but it is not unassailable.

In our view, Nvidia’s biggest threat comes from being so successful that its customers are forced to respond. There are multiple futures for Nvidia and the data center, ranging from Nvidia ending up as just one of many competitors in the data center, to Nvidia becoming the master of the universe. There are enough vulnerabilities in its model to make the latter unlikely, but it has so much momentum that the former is no more likely.