Why it matters: AMD is in the unique position of being the primary competitor to Nvidia on GPUs – for both PCs and AI acceleration – and to Intel for CPUs – both for servers and PCs – so their efforts are getting more attention than they ever have. And with that, the official opening keynote for this year’s Computex was delivered by AMD CEO Dr. Lisa Su.

Given AMD’s wide product portfolio, it’s not surprising that Dr. Su’s keynote covered a broad range of topics – even a few unrelated to GenAI (!). The two key announcements were the new Zen 5 CPU architecture (covered in more detail in a separate article) and a new NPU architecture called XDNA2.

What’s particularly interesting about XDNA2 is its support for the Block Floating Point (Block FP16) data type, which AMD says offers the speed of 8-bit integer math with the accuracy of 16-bit floating point. According to AMD, it’s a new industry standard – meaning existing models can leverage it – and AMD’s implementation is the first to be done in hardware.
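
To make the “8-bit speed, 16-bit accuracy” idea more concrete, here is a minimal Python sketch of generic block floating point. It is not AMD’s implementation: the block size of 8 and the 8-bit signed mantissas are assumptions chosen for illustration, and the function names are hypothetical. Each block of values shares a single exponent, and each value keeps only a small integer mantissa, so the inner loop of a dot product is plain integer multiply-accumulate and the exponents are applied once per block.

```python
import math
from typing import List, Tuple

# Assumed, illustrative parameters; not AMD's published Block FP16 layout.
BLOCK_SIZE = 8      # number of values that share one exponent
MANTISSA_BITS = 8   # signed mantissa width per value, including the sign bit

Block = Tuple[int, List[int]]  # (shared exponent, integer mantissas)


def quantize_block(values: List[float]) -> Block:
    """Encode a non-empty block as one shared exponent plus small signed integers."""
    max_mag = max(abs(v) for v in values)
    if max_mag == 0.0:
        return 0, [0] * len(values)
    # frexp gives max_mag = m * 2**e with 0.5 <= m < 1, so |v| < 2**e for the whole block.
    _, shared_exp = math.frexp(max_mag)
    limit = 2 ** (MANTISSA_BITS - 1) - 1                 # 127 for 8-bit mantissas
    scale = 2.0 ** (shared_exp - (MANTISSA_BITS - 1))    # value of one mantissa step
    # Round each value to the nearest step and clamp into the signed range.
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return shared_exp, mantissas


def dequantize_block(shared_exp: int, mantissas: List[int]) -> List[float]:
    """Recover approximate floats from a shared exponent and its mantissas."""
    scale = 2.0 ** (shared_exp - (MANTISSA_BITS - 1))
    return [m * scale for m in mantissas]


def block_dot(a: Block, b: Block) -> float:
    """Dot product of two blocks using only integer multiply-accumulate inside."""
    exp_a, man_a = a
    exp_b, man_b = b
    acc = sum(x * y for x, y in zip(man_a, man_b))  # integer-only inner loop
    # Apply both shared exponents once for the whole block.
    return acc * 2.0 ** (exp_a + exp_b - 2 * (MANTISSA_BITS - 1))


def quantize_vector(values: List[float]) -> List[Block]:
    """Split a vector into BLOCK_SIZE chunks and quantize each chunk."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def vector_dot(a_blocks: List[Block], b_blocks: List[Block]) -> float:
    """Sum the per-block dot products of two quantized vectors."""
    return sum(block_dot(a, b) for a, b in zip(a_blocks, b_blocks))


if __name__ == "__main__":
    weights = [0.5, -1.25, 3.0, 0.01, -0.75, 2.5, 1.0, -0.0625]
    acts = [1.0, 0.5, -0.25, 2.0, 0.0, 1.5, -1.0, 0.75]
    exact = sum(w * x for w, x in zip(weights, acts))
    approx = vector_dot(quantize_vector(weights), quantize_vector(acts))
    print(f"exact={exact:.6f} block_fp={approx:.6f}")
```

The example also shows the trade-off: the weight 0.01 shares a block with 3.0, so its mantissa rounds to zero and its contribution is lost. Smaller blocks reduce that kind of loss at the cost of storing more exponents.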

The Computex show has a long history of being a critical launch point for traditional PC components, and AMD started things off with their next-generation desktop PC parts – the Ryzen 9000 series – which don’t have a built-in NPU. What they do have, however, is the kind of performance that gamers, content creators, and other PC system builders are constantly seeking for traditional PC applications. Let’s not forget how important that still is, even in the era of “AI PCs.”

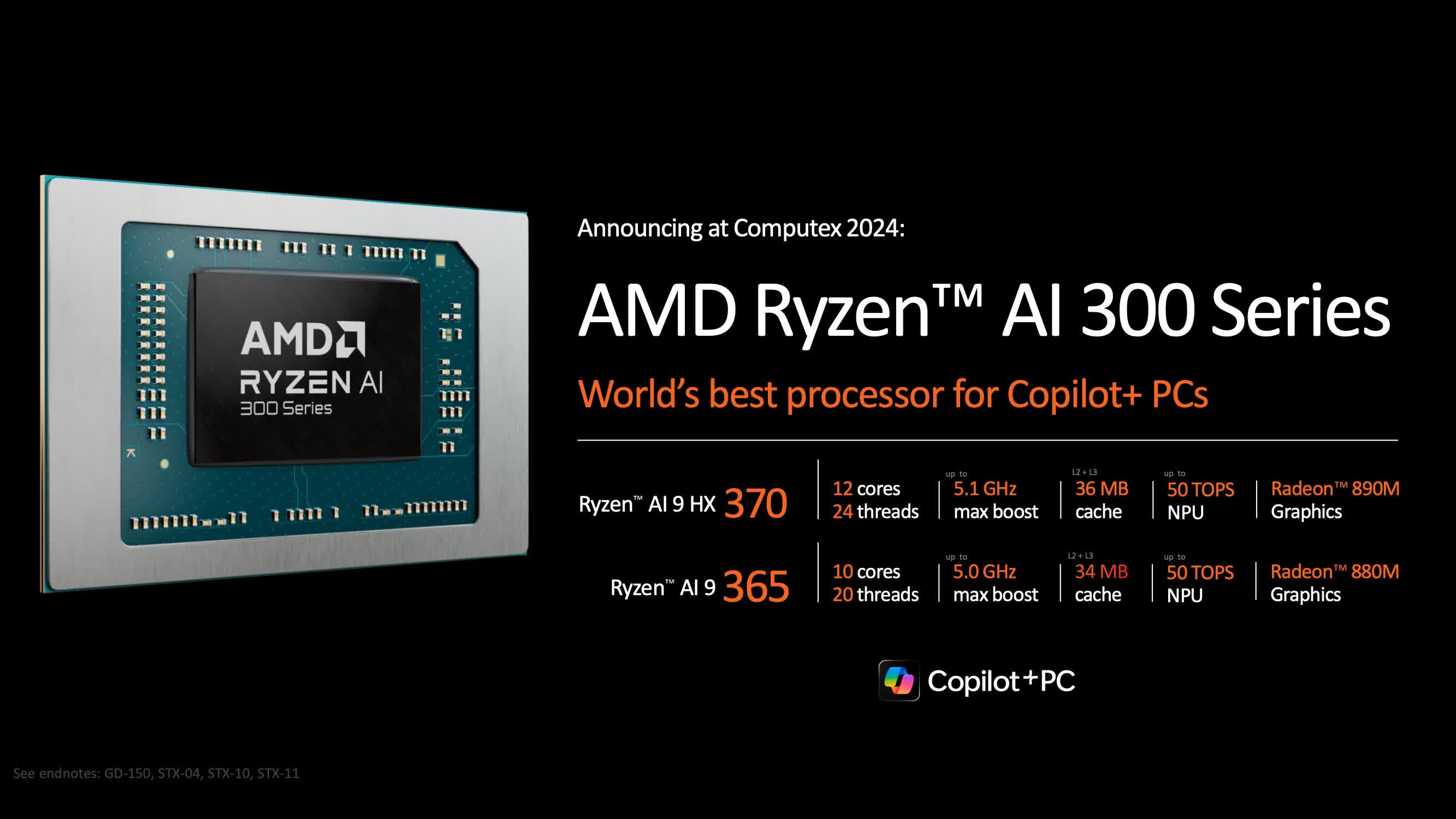

Of course, AMD also had new offerings in the AI PC space – a new series of mobile-focused parts for the new Copilot+ PCs that Microsoft and other partners announced a few weeks ago.

AMD is branding them as the Ryzen AI 300 series to reflect the fact that they are the third generation of laptop chips built by AMD with an integrated NPU. A little-known fact is that AMD’s Ryzen 7040 – announced in January 2023 – was the first x86 PC processor with built-in AI acceleration, followed by the Ryzen 8040 at the end of last year.

We knew this new chip was coming, but what was somewhat unexpected is how much new technology AMD has integrated into the AI 300 (codenamed Strix Point). It features new Zen 5 CPU cores, an upgraded RDNA 3.5 GPU architecture, and a new NPU built on XDNA2 that offers an impressive 50 TOPS of performance.

What’s also surprising is how quickly laptops with Ryzen AI 300s are coming to market. Systems are expected as soon as next month, just weeks after the first Qualcomm Snapdragon X Copilot+ PCs are set to ship.

One big challenge, however, is that the x86 CPU and AMD-specific NPU versions of the Copilot+ software won’t be ready when these AMD-powered PCs launch. Apparently, Microsoft didn’t expect x86 vendors like AMD and Intel to be done so soon and prioritized its work on the Arm-based Qualcomm devices. As a result, these will be Copilot+ “Ready” systems, meaning they’ll need a software upgrade – likely in early fall – to become full-blown next-gen AI PCs.

Still, this vastly accelerated timeframe – which Intel is also expected to announce for its new chips this week – has been impressive to watch. Early in the development of AI PCs, the common thought was that Qualcomm would have a 12- to 18-month lead over both AMD and Intel in developing a part that met Microsoft’s requirement of 40+ TOPS of NPU performance.

The strong competitive threat from Qualcomm, however, inspired the two PC semiconductor stalwarts to move their schedules forward, and it looks like they’ve succeeded.

On the datacenter side, AMD previewed their latest 5th-gen Epyc CPUs (codenamed Turin) and their Instinct MI300 series GPU accelerators. As with the PC chips, AMD’s latest server CPUs are built around Zen 5, and AMD claims they run certain AI workloads up to 5x faster than Intel equivalents.

For GPU accelerators, AMD announced the Instinct MI325X, which AMD says offers twice the HBM3E memory capacity of any competing card on the market. More importantly, as Nvidia did last night, AMD also unveiled an annual cadence of improvements for their GPU accelerator line and offered details through 2026.

Next year’s MI350, which will be based on a new CDNA4 GPU compute architecture, will combine the increased memory capacity with this new architecture to deliver what AMD says is an impressive 35x improvement in AI inference performance versus current cards. AMD believes this will give it a performance lead over Nvidia’s latest-generation products.

AMD is one of the few companies that’s been able to gain any traction against Nvidia for large-scale acceleration, so any enhancements to this line of products are bound to be well-received by anyone looking for an Nvidia alternative – both large cloud computing providers and enterprise data centers.

Taken as a whole, the AMD story continues to advance and impress. It’s amazing to see how far the company has come in the last 10 years, and it’s clear they continue to be a driving force in the computing and semiconductor world.

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow Bob on Twitter @bobodtech