Bottom line: Recent advancements in AI systems have significantly improved their ability to recognize and analyze complex images. However, a new paper reveals that many state-of-the-art visual learning models struggle with simple visual tasks that humans find easy, like counting the number of lines and rows in a grid or how many times two lines intersect.

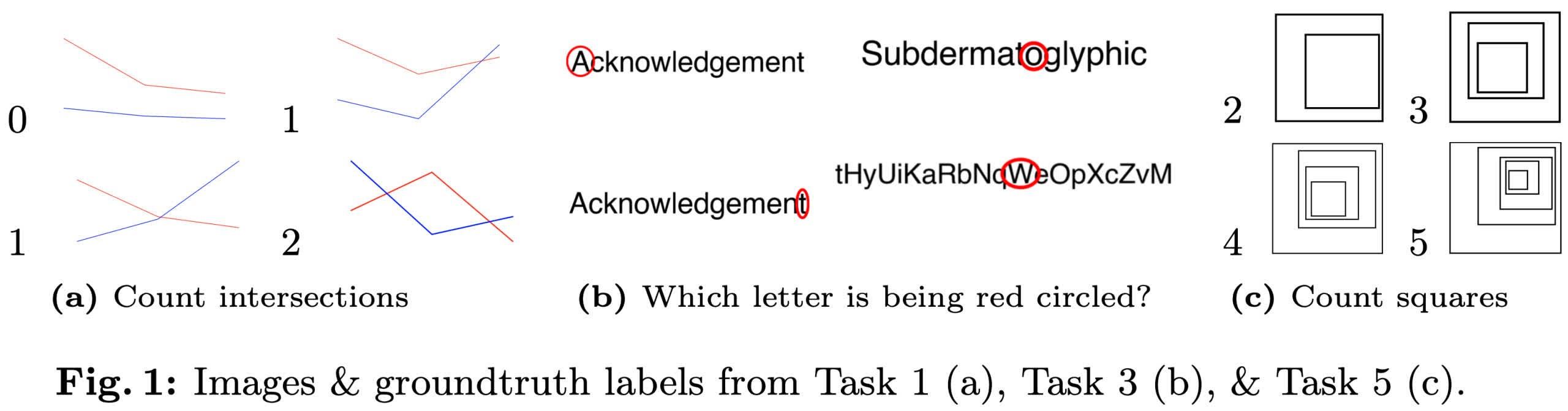

Researchers from Auburn University and the University of Alberta recently published a paper titled “Vision language models are blind.” The study used eight straightforward visual acuity tests to highlight deficiencies in visual learning models (VLM). The tasks included counting intersecting lines, identifying circled letters, counting nested shapes and others. These tests have objectively definitive answers and require minimal knowledge beyond basic 2D shapes.

To avoid models solving these tasks through memorization, the researchers generated the tests using custom code rather than pre-existing images. They evaluated four VLM models, including GPT-4o, Gemini-1.5 Pro, Sonnet-3, and Sonnet-3.5. The results showed that none of the models achieved perfect accuracy, and performance varied significantly depending on the task.

For example, the best-performing model could only count the rows and columns in a blank grid with less than 60 percent accuracy. Conversely, Gemini-1.5 Pro approached human-level performance by correctly identifying circled letters 93 percent of the time.

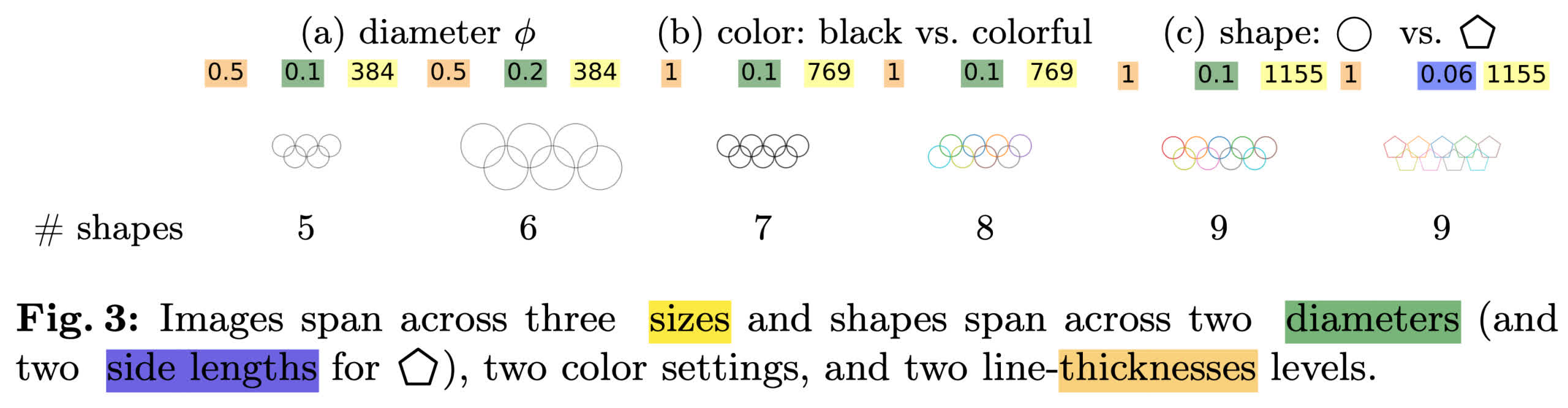

Furthermore, even minor modifications to the tasks resulted in significant performance changes. While all models could correctly identify five overlapping circles, accuracy dropped below 50 percent when the number of circles increased to six or more (above). The researchers theorize that the drop in accuracy might be due to a bias toward the five interlocking rings of the Olympic logo. Some models even provided nonsensical answers, such as “9,” “n,” or “©” for the circled letter in “Subdermatoglyphic” (below).

These findings underscore a significant limitation in the ability of VLMs to handle low-level abstract visual tasks. The behavior is reminiscent of similar capability gaps in large language models, which can generate coherent text summaries but fail basic math and spelling questions. The researchers hypothesized that these gaps might stem from the models’ inability to generalize beyond their training data. However, fine-tuning a model with specific images from one of the tasks (the two circles touching test) only modestly improved accuracy from 17 to 37 percent, indicating that the model overfits the training set but fails to generalize.

The researchers propose that these capability gaps in VLMs might be due to the “late fusion” approach of integrating vision encoders onto pre-trained language models. They suggest that an “early fusion” method, combining visual and language training from the beginning, could improve performance on low-level visual tasks. However, they did not provide an analysis to support this suggestion.

You can view the results and other examples on the team’s website.